How to Scrape Wikipedia Pages to Create a Question-Answer Database? : Gábor Madarász

by: Gábor Madarász

blow post content copied from Be on the Right Side of Change

click here to view original post

Why Create a QA Database?

A good question often opens up new perspectives and new ways of thinking. But that’s not why I had to create a Question-Answering database.

Question-Answering (QA) databases play an important role in researching and developing large language models (LLMs). These databases have two primary applications:

- As training material

- To evaluate results

Traditionally, QA databases can be produced in two main ways:

- Manual compilation: experts or volunteers collect questions and answers, ensuring high quality and accuracy. (But slow and expensive)

- Crowdsourcing: using the public to collect questions and answers. (Quicker but less quality)

However, with today’s modern (Instruction Tuned) language models, it is possible to quickly generate high-quality databases at a low cost.

I could not find a suitable database in my language (Hungarian), so I had to create one.

In this article, I am creating a question-answer database based on Wikipedia pages determined by keywords. For this, I am using the OpenAI GPT-3.5 turbo model and the Wikipedia Python module.

How I Did It

After loading the necessary modules and setting the logging level (level=logging.DEBUG reports detailed information about the system’s operation, set this to "logging.INFO" if you don’t need this level of monitoring), we set up the Openai API.

Do not forget to provide your API key!

I statically encoded the instruction into the “messages” variable and everyone should adapt it to their own language. It is also worth trying different prompts for the best results.

I used this one: "Write 1 relevant question in Hungarian about the following text!"

# GPT 3.5 Turbo API Client

class GPT3ChatClient:

def __init__(self, api_key='sk-YOUR_API_KEY'):

self.api_key = api_key

self.headers = {

"Authorization": f"Bearer {self.api_key}",

"Content-Type": "application/json"

}

self.endpoint = "https://api.openai.com/v1/chat/completions"

def query(self, query_string, max_tokens=100, temperature=0.5, **kwargs):

messages = [{"role": "user", "content": "Írj 1 releváns magyar nyelvű kérdést a következő szövegről! " + query_string}] #You have to customize "content" to your language!

payload = {

"model": "gpt-3.5-turbo",

"messages": messages,

"max_tokens": max_tokens,

"temperature": temperature,

**kwargs

}

response = requests.post(self.endpoint, json=payload, headers=self.headers)

response_data = response.json()

return response_data['choices'][0]['message']['content'] if response_data.get('choices') else None

I have defined a function that transforms Hungarian accented characters, which will be necessary for automatic file name generation. (It’s completely optional!)

It uses the str.replace(char, new_char) function on a predefined dictionary of characters.

# Utility function for string replacement

def replace_accented_chars(input_str):

replacements = {

'á': 'a', 'é': 'e', 'í': 'i', 'ó': 'o', 'ö': 'o',

'ő': 'o', 'ú': 'u', 'ü': 'u', 'ű': 'u', ' ': '_'

}

for accented_char, replacement in replacements.items():

input_str = input_str.replace(accented_char, replacement)

return input_str.lower()

The following function performs Wikipedia scraping with attention to the “results=2” parameter, which determines how many pages to search based on the keyword.

The second argument of the function (language="hu") is the language code. Set prefix to one of the two letter prefixes on the list of all Wikipedias.

I have integrated error handling into the function, and it uses “random.choice” to randomly select a page when a keyword would raise a DisambiguationError if the page is a disambiguation page.

The wikipedia.search() function has a “suggestion” argument, if True, it returns the results and suggestion (if any) in a tuple. I have not used this.

# Wikipedia scraping based on keywords

def scrape_wikipedia(keyword, language="hu"):

wikipedia.set_lang(language)

merged_text = []

result = wikipedia.search(keyword, results=2, suggestion=False)

for item in result:

try:

page = wikipedia.page(item, auto_suggest=False)

except wikipedia.DisambiguationError as e:

selected_option = random.choice(e.options)

logging.debug(f"DisambiguationError: {selected_option}, {e}")

page = wikipedia.page(selected_option)

content = page.content

merged_text.append(content)

return merged_text

Defining some basic text cleaning:

In this section, I filter out non-alphanumeric (Hungarian) characters and replace the "\n" (newline) characters with spaces. To filter out short sentences, we only keep sentences longer than 5 words (Feel free to customize it!).

For an advanced LLM, it is advisable to provide a relatively longer context; this helps in generating better questions.

# Cleaning data

def clean_text(string_list):

allowed_chars = re.compile('[a-zA-ZáÁéÉíÍóÓöÖőŐúÚüÜűŰ\s.:;,%?!0-9-]') #Allow only hungarian characters

clean_list = [''.join(allowed_chars.findall(string.replace("\n", " "))) for string in string_list if len(string.split()) > 5]

return clean_list

After that we initialize the GPT-3.5 ChatClient using an API key.

The API key grants access to the GPT service. We load a Spacy language processing model for the Hungarian language.

“hu_core_news_lg” is a pretrained model for the Hungarian language. Check if the Spacy model has a “sentencizer” factory, which is responsible for sentence splitting.

# Initialize GPT client and Spacy model

# api_key='sk-YOUR_API_KEY'

gpt_client = GPT3ChatClient(api_key)

nlp = spacy.load("hu_core_news_lg")

assert nlp.has_factory("sentencizer")

I break down these pages into sentences using huSpacy’s CNN-based large model, but you can also get acceptable results using the following regex code (if no spacy model is available):

# Regular expression pattern for splitting text into sentences

def split_list_into_sentences(text_list):

pattern = r'(?<!\w\.\w.)(?<![A-Z][a-z]\.)(?<=\.|\?|!)\s'

sentences_list = []

for text in text_list:

sentences = re.split(pattern, text)

sentences_list.extend(sentences)

return sentences_list

Here, we set the keyword that we’ll use later to search for Wikipedia pages:

#Set the keyword for wikipedia search keyword = "OpenAI"

After that,

- We use the

scrape_wikipediafunction to fetch Wikipedia pages based on the provided keyword. Theclean_textfunction is used to clean and preprocess the retrieved text. doc = nlp(str(cleaned_text)): We use Spacy to process the cleaned text, splitting it into individual sentences for further analysis.data = {...}: We create a data table that contains the processed sentences and queries to be asked to GPT-3.5. The queries are the sentences that we’ll ask ChatGPT about. This line creates a dictionary with two keys:'Query'and'Answer'. The values are lists generated using list comprehension.

'Query': In this part of the code, agpt_clientobject is used, and the query function is called for each sentence (sent.text) in the processed document (doc.sents).

The result is a list containing questions from the LLM.

'Answer': In this part of the code, each sentence fromdoc.sents, which was processed earlier, is simply inserted into a list. The result is a list containing all the sentences.df = pd.DataFrame(data): We create a Pandas DataFrame based on the data table created in the previous step.print(f"{len(df)} questions generated."): We print the number of generated questions.

In a corresponding prompt (You have to customize it for your language!), I generated questions for these answers, and then put the data into a DataFrame, from where it can be saved as a file and sent to, for example, human annotators or used for various projects.

Such a project could be, for example, an evaluation of a model’s “factual” knowledge on a given topic. By running through the generated questions, the existing “gold” answers can be compared with the answers given by the model.

Another, more fun use of the database could be, for example, to quickly set up quiz games.

For example, the DataFrame can be exported to an xlsx file to a given path:

# Write result to excel file

output_folder = "./results"

if not os.path.exists(output_folder): # create “results” folder if not exist

os.makedirs(output_folder)

filename = replace_accented_chars(keyword)

df.to_excel(f"{output_folder}/{filename}.xlsx")

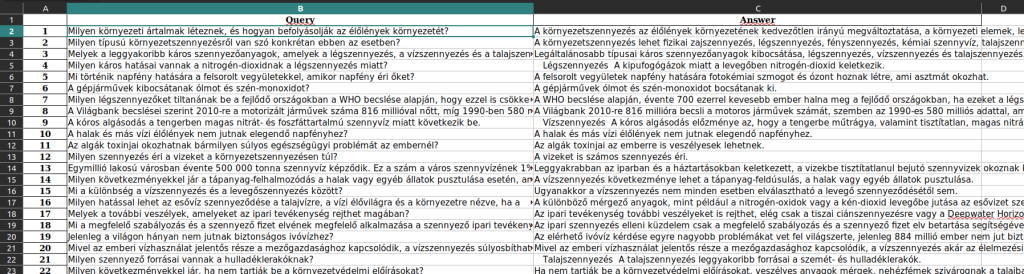

The result xlsx:

Conclusion

The created database contains approximately clean and relevant questions but, of course, it can be further refined (and is worth refining) with human effort, but this already involves much less effort than coming up with the questions from scratch.

Further improvement of the results can be achieved with additional prompting techniques, for example, by providing a system role to guide the language model toward the specific topic.

With the rise of large language models, many tasks are becoming faster, and considering that these models truly excel when working with texts, efficient code can be crafted for such work.

Full Code (Appendix)

Here is the full code:

#!/usr/bin/env python

# coding: utf-8

"""

Q&A Generator with GPT3.5-turbo from Wikipedia pages based on keywords.

"""

# Required libraries

import logging

import sys

import requests

import wikipedia

import random

import warnings

import re

import spacy

import huspacy

import pandas as pd

import os

# Logging configuration

logging.basicConfig(stream=sys.stdout, level=logging.DEBUG)

# GPT 3.5 Turbo API Client

class GPT3ChatClient:

def __init__(self, api_key='sk-YOUR_API_KEY'):

self.api_key = api_key

self.headers = {

"Authorization": f"Bearer {self.api_key}",

"Content-Type": "application/json"

}

self.endpoint = "https://api.openai.com/v1/chat/completions"

def query(self, query_string, max_tokens=100, temperature=0.5, **kwargs):

messages = [{"role": "user", "content": "Írj 1 releváns magyar nyelvű kérdést a következő szövegről! " + query_string}] #You have to customize "content" to your language

payload = {

"model": "gpt-3.5-turbo",

"messages": messages,

"max_tokens": max_tokens,

"temperature": temperature,

**kwargs

}

response = requests.post(self.endpoint, json=payload, headers=self.headers)

response_data = response.json()

return response_data['choices'][0]['message']['content'] if response_data.get('choices') else None

# Utility function for string replacement

def replace_accented_chars(input_str):

replacements = {

'á': 'a', 'é': 'e', 'í': 'i', 'ó': 'o', 'ö': 'o',

'ő': 'o', 'ú': 'u', 'ü': 'u', 'ű': 'u', ' ': '_'

}

for accented_char, replacement in replacements.items():

input_str = input_str.replace(accented_char, replacement)

return input_str.lower()

# Wikipedia scraping based on keywords

def scrape_wikipedia(keyword, language="hu"):

wikipedia.set_lang(language)

merged_text = []

result = wikipedia.search(keyword, results=2, suggestion=False)

for item in result:

try:

page = wikipedia.page(item, auto_suggest=False)

except wikipedia.DisambiguationError as e:

selected_option = random.choice(e.options)

logging.debug(f"DisambiguationError: {selected_option}, {e}")

page = wikipedia.page(selected_option)

content = page.content

merged_text.append(content)

return merged_text

# Cleaning data

def clean_text(string_list):

allowed_chars = re.compile('[a-zA-ZáÁéÉíÍóÓöÖőŐúÚüÜűŰ\s.:;,%?!0-9-]')

clean_list = [''.join(allowed_chars.findall(string.replace("\n", " "))) for string in string_list if len(string.split()) > 5]

return clean_list

# Initialize GPT client and Spacy model

gpt_client = GPT3ChatClient(api_key='sk-') #api_key='sk-YOUR_API_KEY'

nlp = spacy.load("hu_core_news_lg")

assert nlp.has_factory("sentencizer")

# Process keyword

keyword = "OpenAI"

cleaned_text = clean_text(scrape_wikipedia(keyword, language="hu")) #Set the language code from here https://meta.wikimedia.org/wiki/List_of_Wikipedias

# Sentence splitting and DataFrame creation

doc = nlp(str(cleaned_text))

data = {

'Query': [gpt_client.query(sent.text) for sent in doc.sents],

'Answer': [sent.text for sent in doc.sents]

}

df = pd.DataFrame(data)

print(f"{len(df)} questions generated.")

# Write result to excel file

output_folder = "./results"

if not os.path.exists(output_folder): # create “results” folder if not exist

os.makedirs(output_folder)

filename = replace_accented_chars(keyword)

df.to_excel(f"{output_folder}/{filename}.xlsx")

# Optional file formats

#df.to_csv(f"{output_folder}/{filename}.csv")

#df.to_json(f"{output_folder}/{filename}.json")

December 19, 2023 at 05:59PM

Click here for more details...

=============================

The original post is available in Be on the Right Side of Change by Gábor Madarász

this post has been published as it is through automation. Automation script brings all the top bloggers post under a single umbrella.

The purpose of this blog, Follow the top Salesforce bloggers and collect all blogs in a single place through automation.

============================

Post a Comment