Solving Ordinary Least Squares Using QR Decomposition : Puneet Mangla

by: Puneet Mangla

The post content below is copied from PyImageSearch.


Table of Contents

- Solving Ordinary Least Squares Using QR Decomposition

- Overview of Ordinary Least Squares

- What Is QR Decomposition?

- Gram-Schmidt Process for QR Factorization

- QR Factorization for Least Squares Regression

- Transforming the Equations of Ordinary Least Squares Regression

- Solving the Transformed Equations

- Solving Ordinary Least Squares Using QR Factorization

- Summary

Solving Ordinary Least Squares Using QR Decomposition

In this tutorial, you will learn how to solve ordinary least squares regression using QR decomposition.

Ordinary Least Squares (OLS) is a fundamental method in statistics and machine learning for estimating the unknown parameters in a linear regression model. It minimizes the sum of the squared differences between the observed and predicted values and, under the Gauss-Markov assumptions, yields the best linear unbiased estimator (BLUE). However, as datasets grow in size and complexity, ensuring numerical stability and efficiency in computations becomes paramount.

This is where QR Decomposition, a matrix factorization technique, comes into play. QR Decomposition allows us to transform the original problem into a more manageable form by decomposing the matrix of predictors into an orthogonal matrix $Q$ and an upper triangular matrix $R$. This transformation not only enhances computational efficiency but also improves the numerical stability of the solution.

In this blog post, we will explore the theory behind QR Decomposition and its application in solving the OLS regression problem. Through detailed explanations and practical examples, you will gain a deeper understanding of how QR Decomposition can be leveraged to make linear regression more robust and efficient.

This lesson is the last in a 3-part series on Matrix Factorization:

- Solving System of Linear Equations with LU Decomposition

- Diagonalize Matrix for Data Compression with Singular Value Decomposition

- Solving Ordinary Least Squares Using QR Decomposition (this tutorial)

To learn how to solve ordinary least squares regression using QR decomposition, just keep reading.

Overview of Ordinary Least Squares

Ordinary Least Squares (OLS) is one of the most popular and widely adopted methods for estimating the unknown parameters in a linear regression model. Linear regression aims to model the relationship between a dependent variable $y$ and one or more independent variables $X$ by fitting a linear equation to observed data.

The OLS method achieves this by minimizing the sum of the squared differences between the observed values and the values predicted by the linear model, hence the name “least squares.”

The Linear Regression Model

The linear regression model (Figure 1) can be expressed as follows:

$$y = X\beta + \epsilon$$

where:

- $y$: the vector of observed values (dependent variable).
- $X$: the matrix of observed values of the independent variables (also called the design matrix).
- $\beta$: the vector of unknown parameters to be estimated.
- $\epsilon$: the vector of errors or residuals.

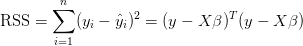

The goal of OLS is to find the parameter vector $\beta$ that minimizes the residual sum of squares (RSS), which is defined as follows:

$$\text{RSS} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = (y - X\beta)^T (y - X\beta)$$

where $\hat{y} = X\beta$ represents the predicted values.
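As a quick illustration of the RSS objective, the sketch below evaluates it with NumPy on the same four data points used in the example near the end of this post. The helper name `rss` and the alternative parameter vector `beta_other` are assumptions introduced only for this illustration.

```python
import numpy as np

def rss(X, y, beta):
    """Residual sum of squares for a candidate parameter vector beta."""
    residuals = y - X @ beta          # observed minus predicted values
    return float(residuals @ residuals)

# Design matrix: a column of ones (intercept) and a single feature.
X = np.array([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]])
y = np.array([6.0, 5.0, 7.0, 10.0])

beta_ols = np.array([3.5, 1.4])       # the OLS solution for this data
beta_other = np.array([3.0, 1.5])     # an arbitrary alternative guess

rss_ols = rss(X, y, beta_ols)
rss_other = rss(X, y, beta_other)
print(rss_ols, rss_other)
```

Any parameter vector other than the OLS solution should give a larger RSS, which is what the second value shows for this particular guess.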

Solving the Ordinary Least Squares Problem

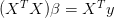

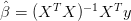

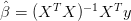

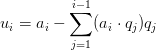

To solve for $\beta$, we set up the normal equations derived from simplifying the RSS minimization:

$$X^T X \beta = X^T y$$

The solution to these equations gives us the OLS estimator for $\beta$:

$$\hat{\beta} = (X^T X)^{-1} X^T y$$

Directly computing the OLS estimator $\hat{\beta}$ can be challenging due to numerical instability, computational complexity, and significant memory usage, especially for large or ill-conditioned matrices.

QR decomposition addresses these issues by decomposing $X$ into an orthogonal matrix $Q$ and an upper triangular matrix $R$, enabling more stable and efficient computations. Instead of directly inverting $X^T X$, QR decomposition transforms the problem into solving a simpler upper triangular system using back substitution (similar to an LU decomposition), thereby enhancing both numerical stability and computational efficiency.
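To make the stability concern concrete, here is a minimal sketch that solves the normal equations with NumPy and compares condition numbers. The data are the four points from the example later in this post; the variable names are illustrative assumptions. In the 2-norm, the condition number of $X^T X$ is the square of that of $X$, which is one reason forming $X^T X$ can cost accuracy.

```python
import numpy as np

X = np.array([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]])
y = np.array([6.0, 5.0, 7.0, 10.0])

# Normal equations: (X^T X) beta = X^T y, solved without an explicit inverse.
XtX = X.T @ X
Xty = X.T @ y
beta_normal = np.linalg.solve(XtX, Xty)

# 2-norm condition numbers of X and of X^T X.
cond_X = np.linalg.cond(X)
cond_XtX = np.linalg.cond(XtX)

print(beta_normal)
print(cond_X, cond_XtX)
```

For this small, well-conditioned matrix the squaring is harmless, but for nearly collinear predictors it can push the problem beyond what double precision resolves.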

What Is QR Decomposition?

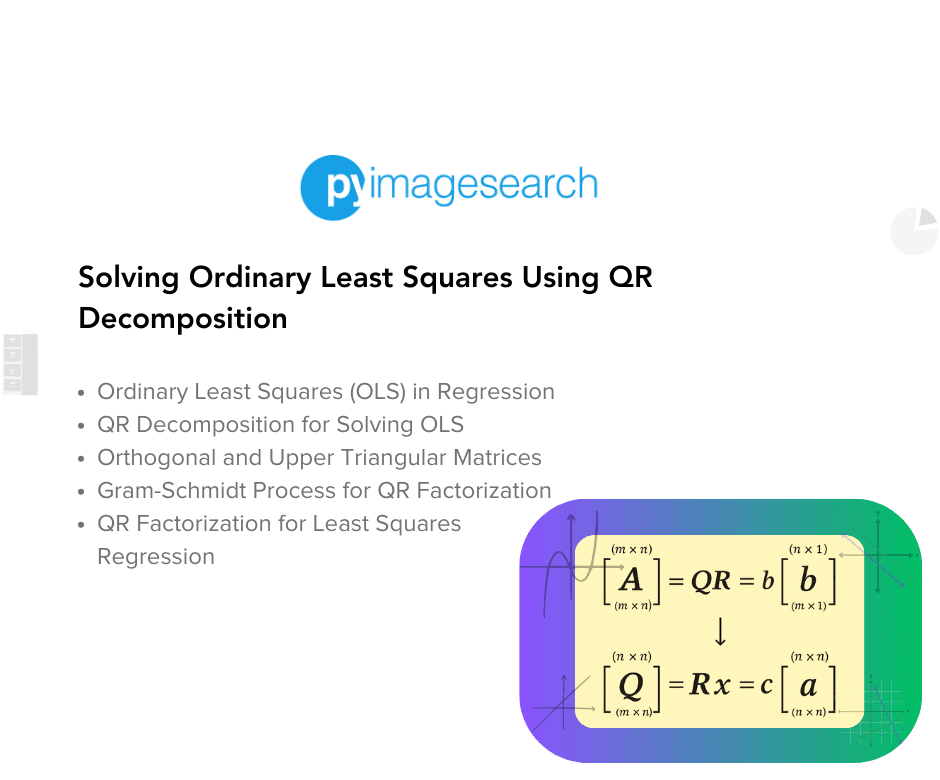

QR decomposition (Figure 2) is a mathematical method used to factorize a matrix into two distinct matrices, $Q$ and $R$, each with unique properties that make solving systems of linear equations more efficient and stable. This decomposition transforms a given matrix $A$ into the product of an orthogonal matrix $Q$ and an upper triangular matrix $R$, such that

$$A = QR$$

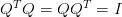

Orthogonal Matrix Q

An orthogonal matrix $Q$ (Figure 3) is a square matrix whose columns (or rows) are orthonormal vectors. This means that the dot product of any two distinct columns (or rows) of $Q$ is 0, and the norm of each column (or row) is 1.

(source: Linearly AI).

Mathematically, a matrix $Q$ is orthogonal if:

$$Q^T Q = Q Q^T = I$$

where $Q^T$ is the transpose of $Q$, and $I$ is the identity matrix. The orthogonality property of $Q$ ensures numerical stability and simplifies computations involving matrix inversion, because the inverse of $Q$ is simply its transpose ($Q^{-1} = Q^T$).
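The properties above can be checked numerically. The sketch below uses a 2D rotation matrix as a convenient example of an orthogonal matrix; the angle and the test vector are arbitrary choices made for illustration.

```python
import numpy as np

theta = np.pi / 6  # arbitrary rotation angle, chosen for illustration
Q = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

# Columns are orthonormal, so Q^T Q is the identity matrix.
is_orthogonal = np.allclose(Q.T @ Q, np.eye(2))

# The inverse of an orthogonal matrix is its transpose.
inverse_is_transpose = np.allclose(np.linalg.inv(Q), Q.T)

# Multiplying by Q preserves Euclidean length.
x = np.array([3.0, 4.0])
norm_before = np.linalg.norm(x)
norm_after = np.linalg.norm(Q @ x)

print(is_orthogonal, inverse_is_transpose, norm_before, norm_after)
```

Length preservation is the practical reason orthogonal transformations do not amplify rounding errors.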

Upper Triangular Matrix R

The matrix $R$ is an upper triangular matrix, meaning that all the elements below the main diagonal are zero. The elements on and above the main diagonal can be non-zero. This triangular structure of $R$ makes it straightforward to solve systems of linear equations using back substitution (recall Lesson 1, where we saw how upper triangular matrices can help solve linear equations using back substitution).
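For readers who have not seen Lesson 1, back substitution can be sketched as follows. The function name `back_substitution` and the $3 \times 3$ system are hypothetical and chosen only so the arithmetic is easy to follow; the sketch assumes every diagonal entry of $R$ is non-zero.

```python
import numpy as np

def back_substitution(R, b):
    """Solve R x = b for an upper triangular R with a non-zero diagonal."""
    n = R.shape[0]
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        # Entries of x to the right of the diagonal are already known.
        known = R[i, i + 1:] @ x[i + 1:]
        x[i] = (b[i] - known) / R[i, i]
    return x

R = np.array([[2.0, 1.0, -1.0],
              [0.0, 3.0,  2.0],
              [0.0, 0.0,  4.0]])
b = np.array([1.0, 7.0, 8.0])

x = back_substitution(R, b)
print(x)
```

The last row gives $x_3$ directly, and each earlier row has exactly one remaining unknown once the later ones are substituted.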

Gram-Schmidt Process for QR Factorization

The Gram-Schmidt algorithm (Figure 4) is a method for obtaining the QR factorization of a matrix by orthogonalizing its columns. Here’s how it works:

Gram-Schmidt Algorithm

Given a matrix $A$ with columns $a_1, a_2, \ldots, a_n$, the goal is to decompose $A$ into an orthogonal matrix $Q$ and an upper triangular matrix $R$.

Initialization

The first step is to start with the first column of $A$, $a_1$. Set

$$q_1 = \frac{a_1}{\|a_1\|}$$

where $\|a_1\|$ is the Euclidean norm of $a_1$. This ensures $q_1$, which is the first column of our $Q$ matrix, is a unit vector, as the definition requires.

Orthogonalization

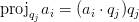

This step (Figure 5) involves transforming each subsequent column $a_j$ (where $j = 2, \ldots, n$) such that it is orthonormal to all previously transformed columns $q_i$ (where $i < j$):

- First, we compute the projection of $a_j$ onto each of the previously computed orthonormal vectors $q_i$ for $i = 1, \ldots, j-1$. The projection is given by: $(q_i^T a_j)\, q_i$.
- Subtract these projections from $a_j$ to obtain the orthogonal component $v_j$:

$$v_j = a_j - \sum_{i=1}^{j-1} (q_i^T a_j)\, q_i$$

- Normalize $v_j$ to obtain the next orthonormal vector $q_j$:

$$q_j = \frac{v_j}{\|v_j\|}$$

(source: The Palindrome).

Forming Matrices

Now that we have computed all orthonormal vectors $q_1, q_2, \ldots, q_n$, we can begin by forming matrices $Q$ and $R$:

- The orthonormal vectors $q_1, q_2, \ldots, q_n$ form the columns of matrix $Q$.
- The coefficients used in the projections form the elements of the upper triangular matrix $R$. Specifically, $R$ is constructed such that: $r_{ij} = q_i^T a_j$ for $i < j$, $r_{jj} = \|v_j\|$, and $r_{ij} = 0$ for $i > j$.

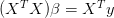

Thus, we obtain the QR decomposition (Figure 6) $A = QR$, where:

- $Q = [q_1 \, q_2 \, \ldots \, q_n]$ is the matrix whose columns are the orthonormal vectors.
- $R$ is an upper triangular matrix with entries $r_{ij}$ for $i \le j$.

$Q$ and $R$ matrices (source: The Palindrome).
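As a small worked example of the steps above, consider the $2 \times 2$ matrix below. The matrix is chosen purely for illustration and does not come from the original figures; $v_2$ denotes the component of $a_2$ left after removing its projection onto $q_1$.

```latex
\begin{aligned}
A &= \begin{bmatrix} 3 & 1 \\ 4 & 2 \end{bmatrix}, \qquad
a_1 = \begin{bmatrix} 3 \\ 4 \end{bmatrix}, \quad
a_2 = \begin{bmatrix} 1 \\ 2 \end{bmatrix} \\
r_{11} &= \|a_1\| = 5, \qquad
q_1 = \frac{a_1}{5} = \begin{bmatrix} 0.6 \\ 0.8 \end{bmatrix} \\
r_{12} &= q_1^T a_2 = 0.6 \cdot 1 + 0.8 \cdot 2 = 2.2 \\
v_2 &= a_2 - r_{12}\, q_1
     = \begin{bmatrix} 1 - 1.32 \\ 2 - 1.76 \end{bmatrix}
     = \begin{bmatrix} -0.32 \\ 0.24 \end{bmatrix} \\
r_{22} &= \|v_2\| = \sqrt{0.1024 + 0.0576} = 0.4, \qquad
q_2 = \frac{v_2}{0.4} = \begin{bmatrix} -0.8 \\ 0.6 \end{bmatrix} \\
QR &= \begin{bmatrix} 0.6 & -0.8 \\ 0.8 & 0.6 \end{bmatrix}
      \begin{bmatrix} 5 & 2.2 \\ 0 & 0.4 \end{bmatrix}
    = \begin{bmatrix} 3 & 1 \\ 4 & 2 \end{bmatrix} = A
\end{aligned}
```

The projection coefficient $r_{12}$ lands above the diagonal of $R$, while the two norms $r_{11}$ and $r_{22}$ form its diagonal.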

Implementing the Gram-Schmidt Algorithm in Python

The code snippet below implements the Gram-Schmidt algorithm to perform the QR decomposition of a matrix. The code uses the NumPy library, which can be installed in your Python environment via pip install numpy.

    import numpy as np

    def gram_schmidt(A):
        """Perform QR factorization using the Gram-Schmidt process."""
        m, n = A.shape
        Q = np.zeros((m, n))
        R = np.zeros((n, n))

        for j in range(n):
            v = A[:, j]
            # Orthogonalization
            for i in range(j):
                R[i, j] = np.dot(Q[:, i], A[:, j])
                v = v - R[i, j] * Q[:, i]

            # Forming Q and R matrices
            R[j, j] = np.linalg.norm(v)
            Q[:, j] = v / R[j, j]

        return Q, R

    # Example usage
    A = np.array([[1, 2, 4], [3, 4, 7], [5, 6, 8]])
    Q, R = gram_schmidt(A)

    print("Matrix A:")
    print(A)

    print("\nMatrix Q:")
    print(Q)

    print("\nMatrix R:")
    print(R)

    print("\nVerifying A = QR:")
    print(Q @ R)

Output:

    Matrix A:
    [[1 2 4]
     [3 4 7]
     [5 6 8]]

    Matrix Q:
    [[ 0.16903085  0.89708523 -0.40824829]
     [ 0.50709255  0.27602622  0.81649658]
     [ 0.84515425 -0.34503278 -0.40824829]]

    Matrix R:
    [[ 5.91607978  7.43735744 10.98700531]
     [ 0.          0.82807867  2.76026224]
     [ 0.          0.          0.81649658]]

    Verifying A = QR:
    [[1. 2. 4.]
     [3. 4. 7.]
     [5. 6. 8.]]

In this Python code, we start by importing numpy for matrix operations (Line 1). In the gram_schmidt function, we initialize zero matrices Q and R to store the orthogonal and upper triangular components (Lines 5-7).

We loop over each column of A, orthogonalize it by subtracting its projections on the previously computed orthonormal vectors, and store the coefficients in R (Lines 9-14). We then normalize the resulting vector to form the next orthonormal vector, storing its norm on the diagonal of R and the normalized vector in Q (Lines 17 and 18).

Finally, we return Q and R (Line 20). The example usage demonstrates how we can factorize a sample $3 \times 3$ matrix and verify $A = QR$ (Lines 23-33).
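The function above follows the classical Gram-Schmidt ordering, in which every projection coefficient is computed from the original column of A. In floating-point arithmetic this variant can lose orthogonality when columns are nearly linearly dependent. A commonly used alternative is modified Gram-Schmidt, sketched below. This is not the code from the post: the function name `modified_gram_schmidt` is an assumption, and the sketch assumes A has full column rank.

```python
import numpy as np

def modified_gram_schmidt(A):
    """QR factorization via modified Gram-Schmidt (assumes full column rank)."""
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    Q = np.zeros((m, n))
    R = np.zeros((n, n))
    V = A.copy()  # working copy whose columns are updated in place

    for i in range(n):
        R[i, i] = np.linalg.norm(V[:, i])
        Q[:, i] = V[:, i] / R[i, i]
        # Remove the q_i component from every remaining column right away.
        for j in range(i + 1, n):
            R[i, j] = Q[:, i] @ V[:, j]
            V[:, j] -= R[i, j] * Q[:, i]
    return Q, R

A = np.array([[1, 2, 4], [3, 4, 7], [5, 6, 8]])
Q, R = modified_gram_schmidt(A)
print(np.allclose(Q @ R, A), np.allclose(Q.T @ Q, np.eye(3)))
```

In exact arithmetic both orderings produce the same Q and R; they differ only in how rounding errors accumulate, because the modified version projects against the already-updated columns.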

QR Factorization for Least Squares Regression

Transforming the Equations of Ordinary Least Squares Regression

The goal of OLS regression is to estimate the coefficient vector $\beta$ that minimizes the sum of squared residuals. This leads to the normal equations:

$$X^T X \beta = X^T y$$

To simplify solving the above normal equations, we can leverage QR Factorization to decompose the matrix $X$ into two matrices: an orthogonal matrix $Q$ and an upper triangular matrix $R$. The decomposition is expressed as follows:

$$X = QR$$

where:

- $Q$: an $m \times n$ orthogonal matrix ($Q^T Q = I$)
- $R$: an $n \times n$ upper triangular matrix

Using this decomposition, we can rewrite the normal equations as follows:

$$R \beta = Q^T y$$
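The intermediate steps of this rewrite can be spelled out as below. The last step assumes $X$ has full column rank, so that $R$ (and therefore $R^T$) is invertible.

```latex
\begin{aligned}
X^T X \beta &= X^T y \\
(QR)^T (QR)\, \beta &= (QR)^T y \\
R^T Q^T Q R\, \beta &= R^T Q^T y \\
R^T R\, \beta &= R^T Q^T y && \text{since } Q^T Q = I \\
R\, \beta &= Q^T y && \text{multiplying both sides by } (R^T)^{-1}
\end{aligned}
```

The product $X^T X$ never has to be formed, which avoids squaring the condition number of the problem.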

Solving the Transformed Equations

The transformed system $R \beta = Q^T y$ is simpler to solve (than the original normal equation $X^T X \beta = X^T y$) because $R$ is an upper triangular matrix, making it suitable for back substitution. The steps are as follows:

- Compute $Q^T y$: Multiply the transpose of $Q$ by the vector $y$, yielding a new vector.
- Back Substitution: Solve the upper triangular system $R \beta = Q^T y$ using back substitution. Starting from the last row and moving upward, each variable $\beta_i$ can be solved sequentially.
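The two steps can also be sketched with NumPy's built-in factorization, `np.linalg.qr`, instead of a hand-written Gram-Schmidt routine. This is an illustrative alternative rather than the implementation used in this post, and the data are the four points from the example that follows.

```python
import numpy as np

X = np.array([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]])
y = np.array([6.0, 5.0, 7.0, 10.0])

# Reduced QR: Q is 4x2 with orthonormal columns, R is 2x2 upper triangular.
Q, R = np.linalg.qr(X)

# Step 1: compute Q^T y.
Qt_y = Q.T @ y

# Step 2: solve R beta = Q^T y. np.linalg.solve is a general solver and
# does not exploit the triangular structure; it is used here for brevity.
beta = np.linalg.solve(R, Qt_y)
print(beta)
```

The signs of the columns of Q and rows of R returned by a library routine may differ from those produced by Gram-Schmidt, but the resulting coefficients are the same.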

Solving Ordinary Least Squares Using QR Factorization

In the Python code below, we solve an Ordinary Least Squares (OLS) regression problem using QR decomposition.

    def ols_qr(X, y):
        """Solve OLS regression using QR decomposition."""
        # Perform QR decomposition
        Q, R = gram_schmidt(X)

        # Compute Q^T * y
        Qt_y = np.dot(Q.T, y)

        # Solve R * beta = Q^T * y using back substitution
        beta = np.zeros(R.shape[1])
        for i in reversed(range(R.shape[1])):
            beta[i] = (Qt_y[i] - np.dot(R[i, i+1:], beta[i+1:])) / R[i, i]

        return beta

    # Example usage
    X = np.array([[1, 1], [1, 2], [1, 3], [1, 4]])
    y = np.array([6, 5, 7, 10])
    beta = ols_qr(X, y)

    print("Matrix X:")
    print(X)

    print("\nVector y:")
    print(y)

    print("\nEstimated coefficients (beta):")
    print(beta)

Output:

    Matrix X:
    [[1 1]
     [1 2]
     [1 3]
     [1 4]]

    Vector y:
    [ 6  5  7 10]

    Estimated coefficients (beta):
    [3.5 1.4]

We start by defining the ols_qr function (Lines 34-47), which takes the design matrix X and the response vector y as inputs. Inside the function, we perform QR decomposition on X using the previously defined gram_schmidt function (Line 37). We then compute $Q^T y$ using the dot product of the transpose of Q and y (Line 40).

Next, we solve the triangular system $R \beta = Q^T y$ using back substitution (Lines 43-45). This involves initializing the vector $\beta$ with zeros and iteratively solving for each coefficient in reverse order, from the last to the first.

In the example usage (Lines 50-60), we define a simple $4 \times 2$ design matrix X and a corresponding response vector y. We call the ols_qr function to compute the OLS regression coefficients $\beta$.
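One way to sanity-check the reported coefficients is to compare them with NumPy's least-squares routine and to confirm that they satisfy the normal equations. The sketch below hard-codes the coefficients from the output above so that it runs on its own; the variable names are illustrative assumptions.

```python
import numpy as np

X = np.array([[1.0, 1.0], [1.0, 2.0], [1.0, 3.0], [1.0, 4.0]])
y = np.array([6.0, 5.0, 7.0, 10.0])

beta_qr = np.array([3.5, 1.4])  # coefficients reported in the output above

# Reference solution from NumPy's least-squares routine.
beta_ref, _, rank, _ = np.linalg.lstsq(X, y, rcond=None)

# At the OLS solution the residual is orthogonal to every column of X,
# which is what the normal equations X^T (y - X beta) = 0 state.
residual = y - X @ beta_qr
normal_eq_gap = X.T @ residual

print(beta_ref, rank, normal_eq_gap)
```

A gap that is zero up to rounding error indicates that the QR-based solution and the normal equations agree on this dataset.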


Summary

This blog post tutorial provided a comprehensive guide on solving Ordinary Least Squares (OLS) regression using QR Decomposition, an efficient and numerically stable method of matrix factorization. The post explained the underlying principles of OLS, a method widely used in statistics and machine learning for estimating unknown parameters in a linear regression model. By minimizing the sum of squared differences between observed and predicted values, OLS aims to find the best linear unbiased estimators.

The tutorial delved into the mechanics of QR Decomposition, describing how it breaks down the design matrix into an orthogonal matrix $Q$ and an upper triangular matrix $R$. This decomposition simplifies the linear regression problem, enhancing both computational efficiency and numerical stability. The post provided a step-by-step explanation of the Gram-Schmidt process used in QR factorization, illustrated with clear diagrams and a detailed Python code example.

Through practical examples and theoretical explanations, readers were equipped to implement QR Decomposition in solving the OLS regression, offering a deeper understanding of both the mathematical concepts and their application in real-world scenarios.

The blog concluded with a practical implementation of the QR Decomposition to solve the OLS problem using Python, providing readers with the tools to apply these techniques effectively in their data analysis projects. This tutorial is the final part of a 3-part series on matrix factorization. It is a valuable resource for those looking to enhance their understanding of advanced algebraic techniques in data science.

Citation Information

Mangla, P. “Solving Ordinary Least Squares Using QR Decomposition,” PyImageSearch, P. Chugh, S. Huot, and P. Thakur, eds., 2025, https://pyimg.co/z4vfe

@incollection{Mangla_2025_Solving-Ordinary-Least-Squares-Using-QR-Decomposition,

author = {Puneet Mangla},

title = {Solving Ordinary Least Squares Using QR Decomposition},

booktitle = {PyImageSearch},

editor = {Puneet Chugh and Susan Huot and Piyush Thakur},

year = {2025},

url = {https://pyimg.co/z4vfe},

}


The post Solving Ordinary Least Squares Using QR Decomposition appeared first on PyImageSearch.

April 21, 2025 at 06:30PM
